After years of creative tooling work on Substance 3D Modeler and Dreams, I am brain-dumping lessons learnt, and this week I want to talk about what the actual data handcuffs are. In a later article I will cover the higher-level parts of this problem and some solutions, but today let's talk about the true data handcuffs, the hard limits: HARDWARE!

Conclusion at the top, as always, to save you the time:

- Buffered grids of pixels dominate visual display tech

- Audio waveforms dominate to an even greater extent

- Their reign is relatively new; see old AV tech.

- Special cases still exist, but backpressure from the dominant formats stagnates our tool pipeline

- Small evolutions are happening; see the changes in VR

- VR makes a consistent depth buffer mandatory

The Audio Visual King

It is safe to say the bulk of creative content consumed today, somewhere in the high nineties as a percentage, is audio-visual data: it is expressed by oscillating a speaker and displaying a grid of pixels. This is a fairly constrained creative bottleneck, held in place by a high degree of technical entropy that would take massive cultural and capital investment to shift.

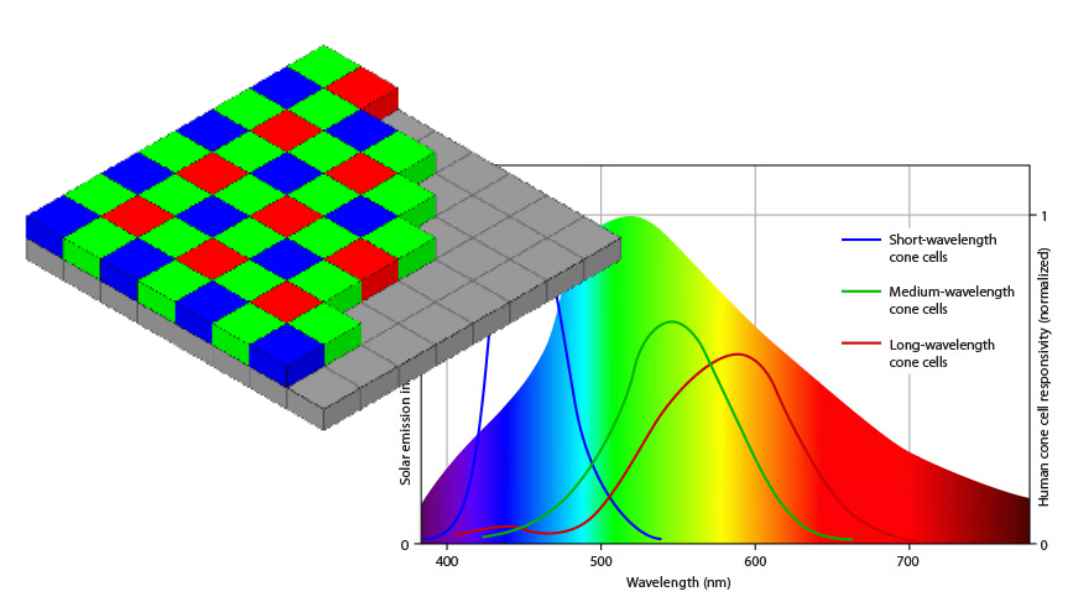

These data formats, while influenced by our biology (see Bayer filter encoding), are not innate to it. Even our experience of sound involves more than our ears detecting changes in air pressure. Still, for the most part we can agree the AV format rules the world.
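To make the Bayer point concrete, here is a minimal sketch of an RGGB mosaic: each photosite keeps only one colour channel, and green is sampled twice as often as red or blue, loosely matching our greater sensitivity to green. The function names are my own, for illustration only, and this ignores demosaicing (interpolating the missing channels back).

```python
from collections import Counter

def bayer_channel(x: int, y: int) -> str:
    """Which colour filter covers photosite (x, y) in an RGGB Bayer pattern."""
    if y % 2 == 0:
        return "R" if x % 2 == 0 else "G"  # even rows: R G R G ...
    return "G" if x % 2 == 0 else "B"      # odd rows:  G B G B ...

def to_mosaic(rgb):
    """Keep only the one channel each photosite records; the other two are
    discarded and must later be interpolated back (demosaicing)."""
    index = {"R": 0, "G": 1, "B": 2}
    return [[pixel[index[bayer_channel(x, y)]] for x, pixel in enumerate(row)]
            for y, row in enumerate(rgb)]

# Count filters over a 4 x 4 sensor patch.
counts = Counter(bayer_channel(x, y) for y in range(4) for x in range(4))
print(counts["R"], counts["G"], counts["B"])  # 4 8 4
```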

It should not be forgotten, though, that other targets exist with their own limitations. Print media, for example, is still a massive part of society. By volume of unique creative output it is relatively small, but I guarantee you have seen at least one billboard, book, magazine, or flyer today.

This was not a foregone conclusion; our audio format was dominated by sheet music far longer than current waveform technology has existed. For a long time the early wax cylinder was outdone by what amounts to digital sheet music, in the form of mechanical player pianos and their kin. Bespoke mechanical music boxes, meanwhile, brought their own unique, non-standard methods of audio encoding.

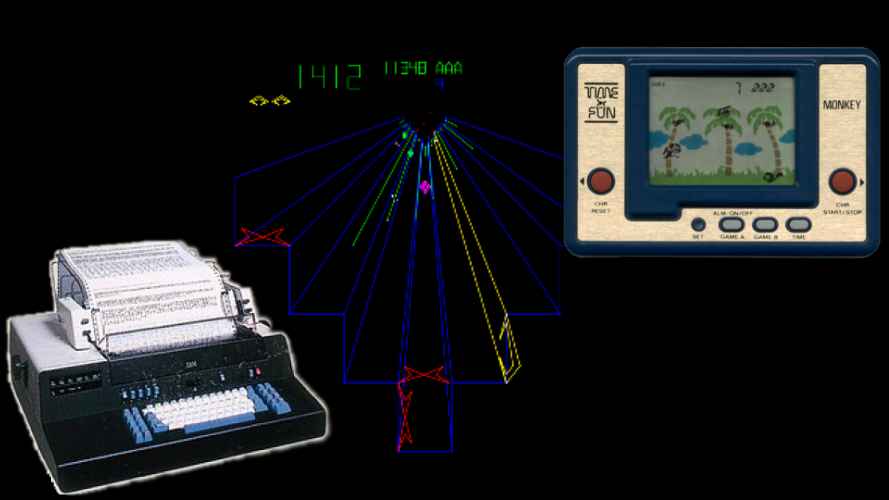

Likewise, the visual format evolved a lot in my lifetime despite seemingly being locked in time. We moved away from crystals on film, for example. Vector displays with their high-contrast monochrome were in the arcades of my youth. I fondly remember playing handheld gaming devices with LCD segment displays. I even recall a hospital using a typewriter-style display in its admin department, though it was old even then.

The point is that the AV format of waveforms and pixel grids is dominant. It is the king of today; long live the king.

Driver Entropy

While it is not a law of nature, the path of least resistance means even new display technologies will conform, at the driver level, to traditional formats. The most concrete example is VSync, a holdover from the once-dominant CRT display, where the vertical blanking interval (the beam returning to the top of the screen) was the safe moment to swap in a new image.

I recall enthusiastically coding for my Pebble watch because Pebble had made the bold decision to expose per-line updates: that is how the hardware worked, and the battery drain of flipping a line was noticeable on its always-on, e-ink-style display. Many modern handheld and wearable devices would benefit from this level of optimisation, but now we depend on the driver to throw away unneeded pixel updates, after we have done the hard work of computing them, just to save on screen cycles.
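The per-line idea can be sketched as a scanline diff: compare the new frame with what is already on the panel and push only the rows that changed. This is a minimal sketch under my own assumptions; `flush_row` is a hypothetical stand-in for a per-line display driver call, not the Pebble SDK's actual API.

```python
from typing import Callable, List, Sequence

Row = Sequence[int]  # one scanline of pixel values

def changed_rows(front: List[Row], back: List[Row]) -> List[int]:
    """Indices of scanlines that differ between the displayed frame and the new one."""
    return [y for y, (old, new) in enumerate(zip(front, back)) if old != new]

def present(front: List[list], back: List[Row],
            flush_row: Callable[[int, Row], None]) -> int:
    """Send only the changed scanlines to the panel and update our copy of it.

    flush_row is a hypothetical per-line driver hook. Returns how many
    lines were actually sent, i.e. how many line flips cost battery.
    """
    dirty = changed_rows(front, back)
    for y in dirty:
        flush_row(y, back[y])
        front[y] = list(back[y])  # the panel now shows the new row
    return len(dirty)
```

For a watch face where only the seconds digits change, most rows compare equal and are never flipped, which is exactly the saving a full-frame driver has to rediscover after the fact.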

Though it was a lovely programming challenge, the Pebble was eventually killed by the Apple Watch and other watches with full-colour displays. Those displays performed the same kinds of optimisations at the driver level, but from a software point of view they were still mostly the standard double-buffered grid of pixels we see on every other display technology.

The point is that our software ecosystem has become so well established that even if a new display format emerged, it would likely be constrained to traditional display paradigms, and it would only survive if it could outperform existing tech using the old formats, even if it were superior in other, non-standard ways.

Wonderful World of Special Cases vs Tools Ecosystem

Just as the battery limits of wearable devices led to innovation in the display space, there are other weird special-case situations: museum or installation pieces, and advertising or limited-use displays doing projection mapping or unique update schemes.

Then there is mechanical printing, be it additive 3D printers, traditional 2D plotters, or a manufacturing process that provides an economic or material advantage. There are special-case outputs in the wild worth paying attention to. MIDI, the digital holdover from those self-playing pianos, can still drive physical instruments robotically. Unique use cases exist.
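MIDI is a nice contrast to the waveform king because it encodes performance events rather than sound. A MIDI 1.0 Note On message is three bytes: a status byte `0x9n` (where `n` is the channel, 0 to 15), a note number, and a velocity, both 7-bit. Note Off uses status `0x8n`. The sketch below only builds the raw bytes; sending them to an instrument would need a MIDI port library, which is out of scope here, and the helper names are my own.

```python
def _check(channel: int, note: int, velocity: int) -> None:
    """Validate the ranges MIDI 1.0 allows for channel voice messages."""
    if not 0 <= channel <= 15:
        raise ValueError("MIDI channel must be 0-15")
    if not (0 <= note <= 127 and 0 <= velocity <= 127):
        raise ValueError("note and velocity are 7-bit values (0-127)")

def note_on(channel: int, note: int, velocity: int) -> bytes:
    """3-byte Note On: status 0x9n, note number, strike velocity."""
    _check(channel, note, velocity)
    return bytes([0x90 | channel, note, velocity])

def note_off(channel: int, note: int, velocity: int = 0) -> bytes:
    """3-byte Note Off: status 0x8n, note number, release velocity."""
    _check(channel, note, velocity)
    return bytes([0x80 | channel, note, velocity])

# Middle C (note 60) on the first channel at velocity 100, then release it.
print(note_on(0, 60, 100).hex())  # 903c64
print(note_off(0, 60).hex())      # 803c00
```

Like a piano roll, this says which key to press and how hard, leaving the actual sound to whatever instrument (or robot) receives it.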

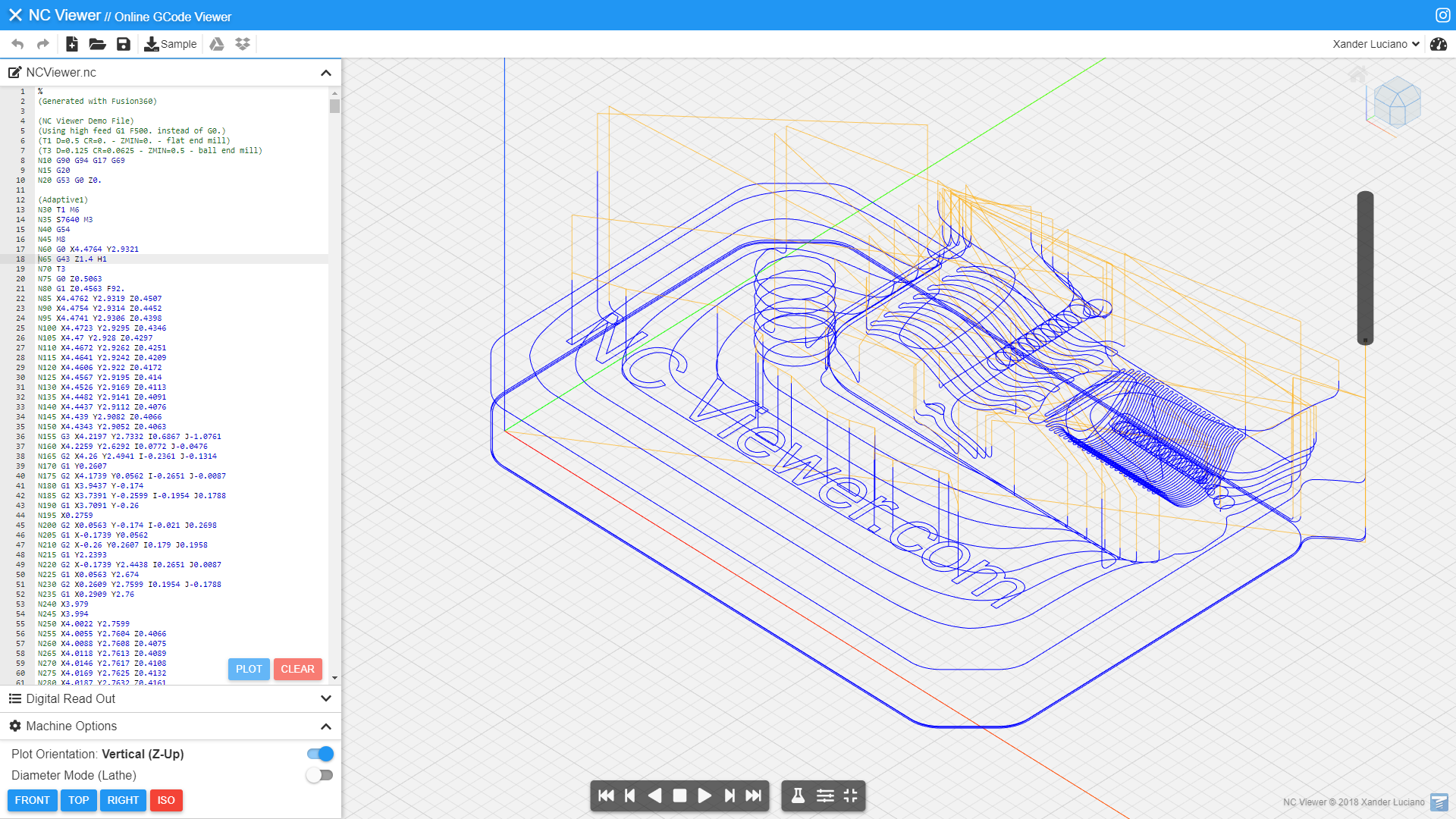

The bulk of things printed on additive printers are 3D models from traditional tool pipelines, which are then fed into slicer software to convert the volume into G-code or a similar format: basically a list of vector lines in space interleaved with tool configuration changes. There is a real opportunity to produce that data from entirely different pipelines; see new manufacturing tech such as Incremental Sheet Forming.
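To show how close G-code is to "a list of vector lines", here is a minimal sketch that emits G-code for one closed perimeter without going anywhere near a mesh or a slicer. `G0` is a travel move, `G1` a linear move, `E` the extruder position and `F` the feed rate in mm/min. The function name, the feed rate and the `extrude_per_mm` constant are my own illustrative assumptions; a real slicer derives extrusion from nozzle size, layer height and filament diameter.

```python
import math
from typing import List, Tuple

Point = Tuple[float, float]

def perimeter_gcode(points: List[Point], z: float, feed: float = 1200.0,
                    extrude_per_mm: float = 0.05) -> List[str]:
    """Trace a closed polygon at height z as G-code.

    extrude_per_mm is a made-up constant for illustration only.
    """
    start = points[0]
    lines = [
        "G21 ; units in millimetres",
        "G90 ; absolute XYZ positioning",
        "M82 ; absolute extruder positioning",
        "G92 E0 ; reset extruder position",
        f"G0 Z{z:.2f}",
        f"G0 X{start[0]:.2f} Y{start[1]:.2f}",
    ]
    e = 0.0
    prev = start
    for x, y in points[1:] + [start]:  # visit every vertex, then close the loop
        e += math.dist(prev, (x, y)) * extrude_per_mm  # filament grows with path length
        lines.append(f"G1 X{x:.2f} Y{y:.2f} E{e:.4f} F{feed:.0f}")
        prev = (x, y)
    return lines

for line in perimeter_gcode([(0, 0), (10, 0), (10, 10), (0, 10)], z=0.2):
    print(line)
```

Any pipeline that can produce ordered paths, procedural, sketch-based or simulation-driven, could feed a printer this way without round-tripping through a triangle mesh.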

The reality, though, is that backpressure from the AV king keeps our tool pipeline pretty rigid.

Virtual Reality: The king is dead, long live the king

Despite our innovations in spatial audio and our attempts at non-standard display formats, the world of XR is basically unchanged from a CRT monitor with a speaker. But basically unchanged is not the same as unchanged: cracks are emerging.

The first crack is that we are now producing two inherently linked images. Early VR had massive performance issues because we treated these as two unlinked framebuffers. Now, with multiview in Vulkan and other rendering APIs, we acknowledge that these are linked and rendered from almost identical spatial positions. This allows massive optimisations in vertex processing and even some fragment processing. This is an area of innovation to keep an eye on.
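Here is a CPU-side sketch of why linking the two views pays off. In Vulkan this is the multiview feature (`VK_KHR_multiview`, core since Vulkan 1.1), where a shader reads `gl_ViewIndex` to select per-view matrices; the Python below is not that API, just an illustration under simplifying assumptions: eyes offset only along x by half the IPD, a bare pinhole projection, and a hypothetical `model_to_world` standing in for expensive view-independent work such as skinning.

```python
from typing import List, Tuple

Vec3 = Tuple[float, float, float]
Vec2 = Tuple[float, float]

def model_to_world(v: Vec3, origin: Vec3) -> Vec3:
    """Stand-in for view-independent vertex work (skinning, animation, model matrix)."""
    return (v[0] + origin[0], v[1] + origin[1], v[2] + origin[2])

def project(p: Vec3, eye_x: float, focal: float) -> Vec2:
    """Per-view work: shift into one eye's space (eyes offset along x) and apply
    a pinhole projection with the camera looking down -z."""
    x, y, z = p[0] - eye_x, p[1], p[2]
    return (focal * x / -z, focal * y / -z)

def render_stereo(vertices: List[Vec3], origin: Vec3, ipd: float,
                  focal: float) -> List[List[Vec2]]:
    """Shared work runs once per vertex; only the cheap projection runs per eye."""
    world = [model_to_world(v, origin) for v in vertices]  # once, not once per eye
    eyes = (-ipd / 2, ipd / 2)                             # view 0 = left, view 1 = right
    return [[project(p, eye_x, focal) for p in world] for eye_x in eyes]

left, right = render_stereo([(0.0, 0.0, -2.0)], (0.0, 0.0, 0.0), ipd=0.064, focal=1.0)
print(left[0], right[0])
```

With two unlinked framebuffers, `model_to_world` would run twice per vertex; treating the pair as one multiview render halves that work, and the two projections differ only by a small horizontal offset.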

Likewise, higher-contrast displays, which predate VR in the form of HDR, are starting to move into headsets, requiring higher bit depth. At the same time, offloading rendering to a separate device, be it in-home streaming or cloud streaming, is becoming more popular, and streaming pushes back against those higher bit rates.
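Some back-of-envelope arithmetic shows the tension. Raw bandwidth is width x height x channels x bits per channel x frame rate x views, so going from 8 to 10 bits per channel costs 25% more before any compression. The 2000 x 2000 per eye at 90 Hz figures are hypothetical, not any particular headset, and real streaming uses codecs that compress far below these raw numbers.

```python
def raw_bitrate_gbps(width: int, height: int, bits_per_channel: int, fps: float,
                     views: int = 2, channels: int = 3) -> float:
    """Uncompressed video bandwidth in gigabits per second for a stereo headset."""
    bits_per_frame = width * height * channels * bits_per_channel
    return bits_per_frame * fps * views / 1e9

# Hypothetical headset: 2000 x 2000 pixels per eye at 90 Hz.
for depth in (8, 10):
    print(depth, "bit:", f"{raw_bitrate_gbps(2000, 2000, depth, 90):.2f}", "Gbit/s")
# 8 bit: 17.28 Gbit/s
# 10 bit: 21.60 Gbit/s
```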

With the release of OpenXR 1.1, the quad views format championed by Varjo is now part of the standard. This basically means four image buffers: two low-resolution and two high-resolution images. In some headsets, such as Varjo's, these will be physically separate displays; in others they will be a software mapping for foveated rendering driven by eye tracking.
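The appeal of four views is easiest to see as a pixel budget. This sketch compares rendering both eyes at uniform full density against, per eye, a wide low-resolution view plus a full-density foveal inset. All resolutions and fractions are hypothetical; in practice the runtime reports recommended sizes for each view in the view configuration OpenXR 1.1 promoted from Varjo's `XR_VARJO_quad_views` extension.

```python
def stereo_pixels(width: int, height: int) -> int:
    """Pixels per frame when both eyes render at uniform full density."""
    return 2 * width * height

def quad_view_pixels(width: int, height: int, inset_fraction: float,
                     peripheral_scale: float) -> int:
    """Pixels per frame for quad views: per eye, a wide low-res view plus a
    full-density inset.

    inset_fraction: share of the field of view (per axis) the inset covers.
    peripheral_scale: resolution of the wide view relative to full density (per axis).
    The wide view still covers the inset area, so that overlap is counted too.
    """
    wide = round(width * peripheral_scale) * round(height * peripheral_scale)
    inset = round(width * inset_fraction) * round(height * inset_fraction)
    return 2 * (wide + inset)

full = stereo_pixels(4000, 4000)
quad = quad_view_pixels(4000, 4000, inset_fraction=0.25, peripheral_scale=0.25)
print(full, quad, quad / full)  # 32000000 4000000 0.125
```

Under these made-up numbers the eye sees full density where it is looking, for an eighth of the pixels.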

Finally, perhaps the biggest change to the format is the depth buffer. It is a longtime artefact of 3D rendering that, outside of fringe use cases, we have never needed to present to display hardware. In a world of synthetic frames and ultrafast frame rates, though, presenting a depth buffer to the headset is now a must, because generating an in-between frame for a head that has moved requires knowing how far away each pixel is. This is the biggest change to visual presentation in a while.
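Why depth matters for synthetic frames: when the runtime fabricates a frame for a head that has translated slightly, the distance each pixel should slide depends on how far away it is (parallax). Pure rotation can be corrected without depth, but translation cannot. Below is a minimal single-pixel reprojection sketch assuming a pinhole camera, linear depth along the view axis, and translation only; it illustrates the idea rather than any runtime's actual algorithm. In OpenXR, the `XR_KHR_composition_layer_depth` extension is one way an application hands depth to the runtime.

```python
from typing import Tuple

def reproject(u: float, v: float, depth: float, focal: float, cx: float, cy: float,
              head_delta: Tuple[float, float, float]) -> Tuple[float, float]:
    """Move one pixel from the rendered frame to where it appears after the head moves.

    depth: linear distance along the view axis (z points forward, > 0).
    head_delta: (dx, dy, dz) head translation since the frame was rendered.
    Rotation is ignored to keep the sketch short.
    """
    # Unproject: pixel + depth -> 3D point in the old camera's space.
    x = (u - cx) * depth / focal
    y = (v - cy) * depth / focal
    z = depth
    # Express that point relative to the moved camera.
    x, y, z = x - head_delta[0], y - head_delta[1], z - head_delta[2]
    if z <= 0:
        raise ValueError("point is behind the new eye position")
    # Project back to pixel coordinates.
    return (cx + focal * x / z, cy + focal * y / z)

# Head moves 10 cm right: the near pixel slides twice as far as the far one.
print(reproject(320, 240, 2.0, 500.0, 320.0, 240.0, (0.1, 0.0, 0.0)))
print(reproject(320, 240, 1.0, 500.0, 320.0, 240.0, (0.1, 0.0, 0.0)))
```

Without a depth value per pixel there is no way to tell those two cases apart, which is why the depth buffer has to travel with the colour image.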

Wrap it up

Next I want to talk about how this pipeline pressure manifests in our tool chains, what is fixed and what isn't, but this week was about the end of the pipeline: mostly stable, but with some things changing and unique use cases that can't be ignored. Again, the takeaways from the start of the article:

- Buffered grids of pixels dominate visual display tech

- Audio waveforms dominate to an even greater extent

- Their reign is relatively new; see old AV tech.

- Special cases still exist, but backpressure from the dominant formats stagnates our tool pipeline

- Small evolutions are happening; see the changes in VR

- VR makes a consistent depth buffer mandatory

If you made it to the bottom, please tell me on your platform of choice what your favourite retro or special-case display or audio tech is. I love hearing about them.

Questions or feedback to me on Twitter or Mastodon please.

Also a reminder that the RSS Feed is still a thing.