As part of my brain dump series on writing creative tools off the back of Substance 3D Modeler and Dreams, I want to talk about WHY I was working on creative tools. Back in 2014 I first interviewed at PlayStation in the hope of being involved in the Morpheus Project, the wire basket VR prototype that I had started developing prototypes for as a 3rd party developer at Climax and that would later become PlayStation VR 1. The word which was conspicuous was Metaverse.

Normally I would present the conclusions at the start of the article, but this is really the tale of why and how I got sucked into building creative tools. For the more technical takeaway articles, come back next week.

The word metaverse is poisoned now; it wasn’t in 2014. I was applying to join the Online Technology Group, just the kind of nerdy group thinking deeply about networked worlds. As a Stephenson fan, I am unsure if it was I or Richard Lee who first used the word in the interview. It was being thought about by a lot of nerds who had been chipping away at the problem over decades. Two visionaries at Sony who had it on their minds were Richard Lee, the CTO for Sony's internal first party studios, and Shuhei Yoshida, who was in charge of the division called World Wide Studios at the time.

I was enamoured with Virtual Reality. My previous obsession, which I had chased for about a decade, was semantic webs and dialogue trees, but that is another story. Both share a common desire for social storytelling and play, and for the power of simulation and alternate realities. These are highfalutin terms, not grounded in how you build things or in the real problems, but they inform the why. The purpose sharpens the knife of technical choices, allowing you to make leaps not obvious to others.

Both Richard and Shu had the kind of long term vision which you dream of in your leadership. I spoke at length about the metaverse, lobbies and the problems an interconnected simulation would entail. I dug into the job, doing many things, but a mainline thread was spawned one day when Richard called my desk, as he was wont to do, with a question.

What does multiplayer look like in Virtual Reality?

This spawned a whole range of discussions, though it had itself been started by a discussion with Shu and Richard. It led to a few patents, an internal RnD project, a GDC talk and some real foundational stuff. PlayStation was no stranger to the space, having launched PlayStation Home on the PS3, which was successful. The external perception was of course out of line, but having seen the numbers, I know it did well. I cannot talk about the details of why that project needed to be shut down, but we were in the process of getting ready for it. The best summation I can give, which drills to the core of the problem, is twofold: the person driving the project had left, and there was a content desert.

Networking is the primary problem

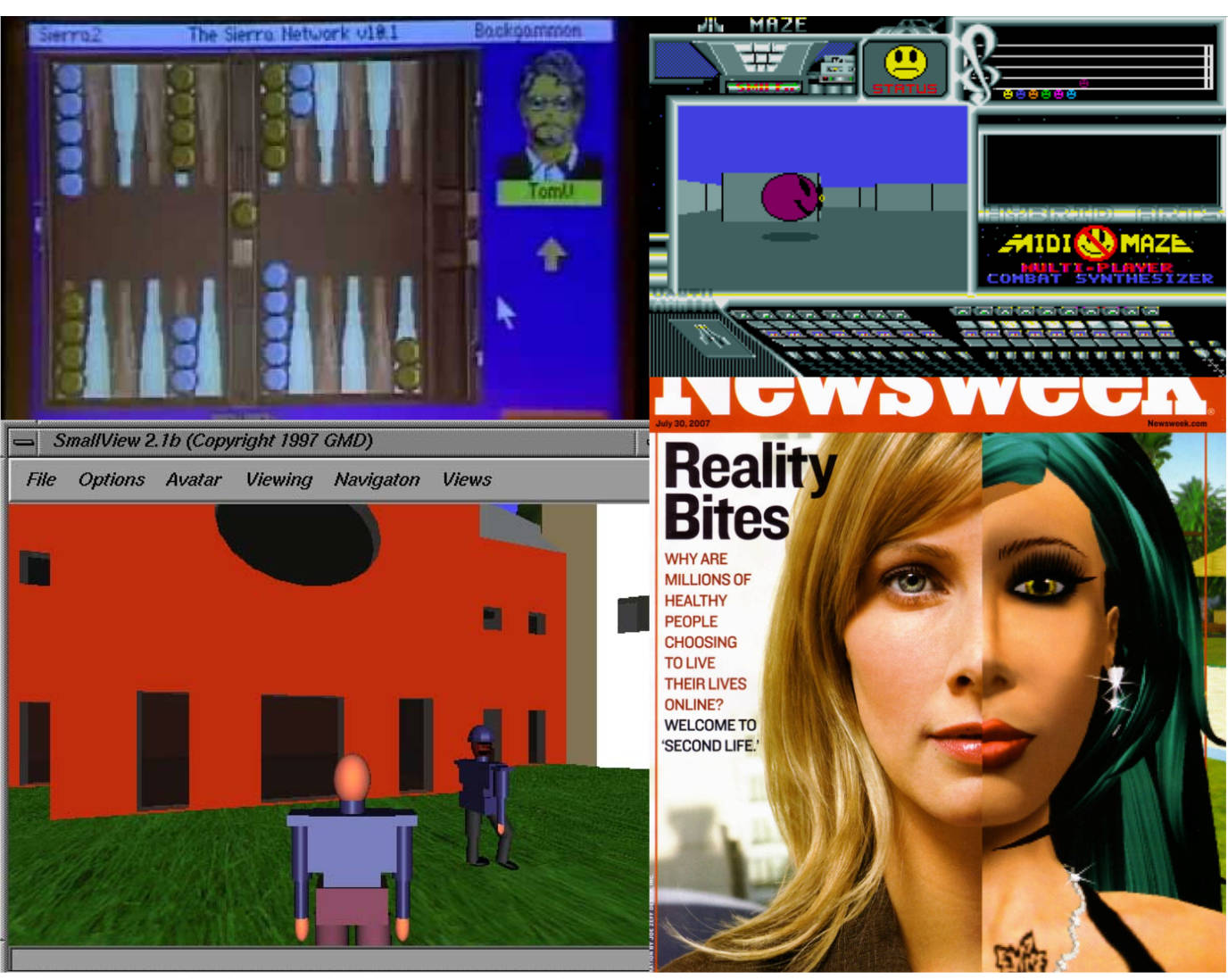

Those who speak the words Virtual Reality and Metaverse in the same sentence are often short-sighted these days, but we were not. In fact most people I know who really work in the space of building connected network spaces know their history, at least those who are successful. I shan’t recount it all here, but if you're not familiar with PlayStation Home, Second Life, VRML, MUDs, QuakeWorld, The Sierra Network and MIDI Maze, they are all required reading.

I hate networking code, so why did I spend a large part of my life writing it? Play is social and networked computers allow a magic that solo play can never match. Even the best single player stories are elevated through social discussion and sharing those experiences. At the time I was also working deeply with YouTube and Twitch as part of PlayStation for the same reason. Networking is hard, but so rewarding. Connectivity matters.

We have gone from the serial networking of MIDI Maze, through the lag jumps of Duke 3D and Quake, into the prediction and rollback of modern Call of Duty, which coped with some of the worst packet drop ever during the PS4 era of bad Wi-Fi decisions, crowded air space and Wi-Fi specification arguments. Tons of teams are improving the low level layers and the high level trickery for hiding the limitations of physics.

I was speaking to people involved in PlayStation Now, the best and most established cloud gaming solution. People forget about it, but when I see new players hype up their solution, such as Google and other tech bros, I know the numbers from that service. It works but it's a HARD problem. So cloud gaming was being worked on by some of the smartest people.

Networking optimisation and trickery are key even to non-obvious issues like voice chat. While voice chat has a large hardware component, and audio processing which should not be understated for the Metaverse, the hardest issue was networking those voices, especially with spatial audio at low latency, and resolving the audio mixes in interactive spaces. Sound is slower than light, but we are more sensitive to audio latency. It was another networking problem that a co-worker was already solving.

I was confident the difficult networking problems had the best people, money and motivation.

Problem everyone was ignoring

At the time I was doing this work the space was heating up fast, with lots of players. Many people saw the dream and brought different levels of experience to it. But I looked through the space and reflected on the problems of Home and the bare bones tech demos being produced by the new tech companies. I remembered, fondly and awkwardly, my time spent in Second Life, but also the complexity we lost when moving from player run text based MUDs to the EverQuests and the Theme Park MMO world. It was all about one word: Content.

Go back and read those big Second Life articles: Content Creators were always in the spotlight. When I worked at Jagex and in the MMO space, the content treadmill and the cost of content were a constant topic of conversation. If you look at the most successful metaverse, VRChat, it is driven by a powerful creator economy. In 2014 VRChat was mostly a curious small player. It would be years till the Knuckles and Steam user explosions.

My consulting job and eSports work had me working with YouTube and Twitch, deep in the social space, examining the explosion of the web from text to digital photography to video and finally to broadcast. The content pipe was exploding. The problem is that to inhabit a social connective space you want a 3d world, which means you need 3d content, rigged and animated, to be as easy to make as video. That is a HUGE gap.

User Generated Content

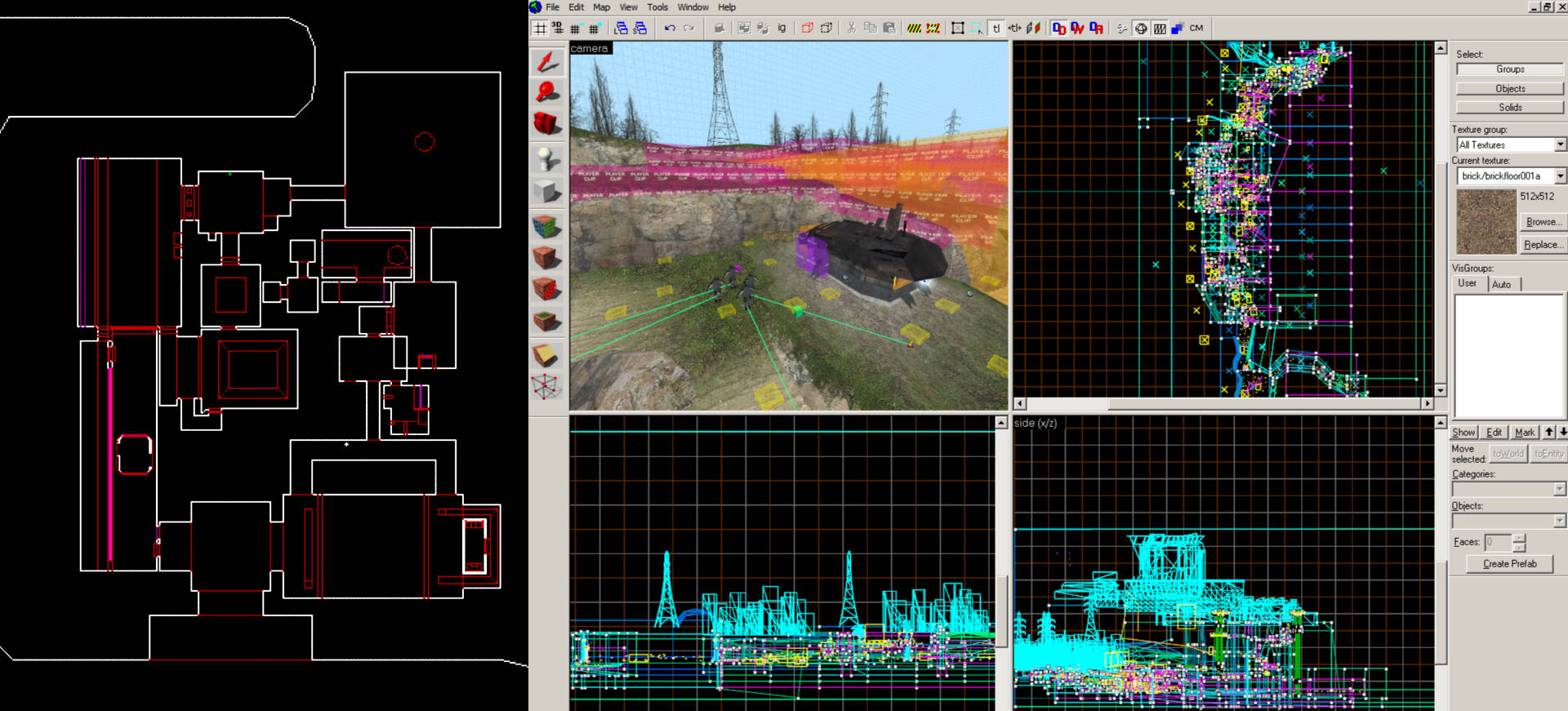

The gaming term UGC is now commonplace but it is still very new to the space. Things like Pinball Construction Set have made level design possible for a long time, and Build Engine made 3d level design accessible to the masses with its flat maps. Then as we moved to Hammer and Unreal Editor the ramp turned into a cliff. We lose people the moment we need to work in true 3d.

I’ve loved playing with 3d Studio as it grew into Max, programs like Poser and Bryce and the early explosion of innovation in the space. I grew up with these tools but I wasn’t seeing the explosion of 3d content that was needed to power the Metaverse.

The creative tools in games had been getting less powerful, not more, and more restrictive than the golden 90s mod era of PC gaming. The exception of course was Little Big Planet, the 2008 powerhouse which was 2.5d in nature and created 3d worlds with simple layers and powerful tools, and which redefined the conversation. Not long after, Minecraft started development; remind me to write about the funny Jagex story there. We saw a ton of people exploding in their 3d creativity with the lego brick mindset that voxels enabled.

Well, Alex Evans was a frequent visitor to the London office, and watching early Dreams dev I basically turned around to Shu and Richard and said: Dreams is the answer!

The Dream Solution

If you have a content problem then Dreams is the solution.

I want to write another piece about surfaces vs volumes and various technical hurdles to clean up but I will summarise them here in my perceived order of importance for the Metaverse.

- Performance is hard to predict and scale

- Traditional Tools are filled with arcane knowledge

- Building 3d content with 2d tools

- Game Code is Network Code

- Topology and UV Space

- Rigging and Animation

How does Dreams solve these problems?

Performance

You can see this in VRChat and many other platforms with custom created avatars. When avatars are handmade rather than generated from sliders, it is hard to predict their performance. This extends out to loading in levels, random props etc…

Dreams levels were tiny. With the exception of audio samples, the entire dataset was delta based and built up from an optimised storage of the strokes and actions which made the thing, leading to file sizes orders of magnitude smaller than more traditional levels.

Little Big Planet is shocking in how well it scales, and Dreams even more so. While there were performance issues with some of the sculpting at that time, the performance scalability of signed distance fields was insane. Gaussian splats are now all the rage but are quite old tech, as are voxels. The particular mix that Dreams opted for was very scalable, and most of the limits were based around memory, which was predictable and easy to scale.
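To make the stroke idea concrete, here is a minimal sketch of a shape stored as a list of edits and evaluated as a signed distance field. It is an illustration under loud assumptions, not the Dreams format or renderer: the `Stroke` type, the sphere-only primitive, the byte counts and the helper names are all hypothetical, chosen only to show why an edit list stays tiny compared with a dense grid of samples.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class Stroke:
    """One hypothetical edit: add a sphere, or carve one out."""
    x: float
    y: float
    z: float
    radius: float
    subtract: bool = False


def sphere_distance(stroke, px, py, pz):
    # Signed distance to a sphere: negative inside, positive outside.
    return math.dist((px, py, pz), (stroke.x, stroke.y, stroke.z)) - stroke.radius


def scene_distance(strokes, px, py, pz):
    """Replay the edit list in order to get the distance at one point.

    Union is min(), carving is max() with the negated distance. After
    carving, the value is a conservative bound rather than an exact distance.
    """
    d = math.inf
    for s in strokes:
        ds = sphere_distance(s, px, py, pz)
        d = max(d, -ds) if s.subtract else min(d, ds)
    return d


def edit_list_bytes(strokes, bytes_per_float=4):
    # Assumed layout: four floats plus one flag byte per stroke.
    return len(strokes) * (4 * bytes_per_float + 1)


def dense_grid_bytes(resolution, bytes_per_sample=1):
    # A dense cubic grid storing one sample per cell, for comparison.
    return resolution ** 3 * bytes_per_sample


# A unit sphere with a smaller sphere carved out of its side.
strokes = [
    Stroke(0.0, 0.0, 0.0, 1.0),
    Stroke(1.0, 0.0, 0.0, 0.5, subtract=True),
]

inside = scene_distance(strokes, 0.0, 0.0, 0.0)   # negative: inside the shape
carved = scene_distance(strokes, 1.0, 0.0, 0.0)   # positive: inside the carved hole
```

In this toy layout the two strokes cost 34 bytes, while a 256 cubed grid at one byte per sample costs 16 MiB, and the memory cost of the edit list grows with the number of edits rather than with the resolution you choose to evaluate it at.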

Arcane Knowledge

Dreams gave us a chance to re-examine every step of the creative process.

While many could accuse Dreams of having its own store of weird knowledge you needed to acquire, very little of it was required to make things. As Kareem explained, it was a musical instrument. The basic chords could be learnt very quickly but the mastery would take a lifetime.

The issue with traditional 3d pipelines is that they require an apprenticeship, or consulting ancient tomes, to know how topology affects rigging and skinning. Our current pipeline was not designed from first principles but rather evolved from layers of interoperability and compromise. Trends started before personal computers still define the modern art pipeline today.

3D Tools

Before VR, the thing which started the 10 year journey of Dreams was the Move Controllers, when AntonK made the first block prototype. It was driven by the fact that you had the first true 3d tools in a consumer device. 6 DOF tracked controllers, even without a VR headset, transform the problem space, bringing it more into the range of giving a child coloured putty to sculpt with.

Now it was obvious that VR was going to be a big part of Dreams' future.

Network Code

The fact that Tim Sweeney set out to write a new functional programming language for UEFN called Verse made me certain he was taking the problem of the Metaverse seriously, because as any network programmer will tell you, the real problem is that ALL logic is networked. So often networking bugs came from programmers higher up the stack not thinking about how their state works over a network. The halting problem and various other nightmares exist, especially in a low trust environment with user made content. Functional programming is an obvious step for those who study the problem, but functional programming in text is hard to explain.

It turns out, however, that analog circuits and visual scripting map well to the problem space too. Redstone and LBP/Dreams gadgets are large grids of logic which can be evaluated in a network friendly manner when built correctly.
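As a rough sketch of what "network friendly" can mean for a grid of logic, the toy below evaluates gates in fixed ticks, with every gate reading only the previous tick's values. Under that assumption the result depends only on the input log, so two peers replaying the same inputs end in the same state, whatever order they visit the gates in. This is my own illustration, not how Redstone, LBP or Dreams evaluate their gadgets; `step`, `simulate` and the gate names are hypothetical.

```python
from typing import Callable, Dict, List, Tuple

# A gate is a pure function plus the names of the signals it reads.
Gate = Tuple[Callable[..., bool], Tuple[str, ...]]


def step(gates: Dict[str, Gate], state: Dict[str, bool],
         inputs: Dict[str, bool]) -> Dict[str, bool]:
    """Advance one tick.

    Gates read this tick's inputs and the PREVIOUS tick's gate outputs,
    never values written during this tick, so visiting order is irrelevant.
    """
    previous = {**state, **inputs}
    nxt = dict(inputs)
    for name, (fn, sources) in gates.items():
        nxt[name] = fn(*(previous.get(src, False) for src in sources))
    return nxt


def simulate(gates: Dict[str, Gate],
             input_log: List[Dict[str, bool]]) -> Dict[str, bool]:
    # Replaying the same input log always produces the same final state.
    state: Dict[str, bool] = {}
    for inputs in input_log:
        state = step(gates, state, inputs)
    return state


# A latch that stays on once the button is pressed, driving a lamp.
gates = {
    "latch": (lambda pressed, held: pressed or held, ("button", "latch")),
    "lamp": (lambda held: held, ("latch",)),
}
input_log = [{"button": False}, {"button": True},
             {"button": False}, {"button": False}]

peer_a = simulate(gates, input_log)
# A second peer that happens to visit the gates in the opposite order.
peer_b = simulate(dict(reversed(list(gates.items()))), input_log)
```

Only the input log needs to cross the wire here; the gate state itself is reconstructed identically on each side, which is the property lockstep style networking relies on.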

UV and Polygon Problem

Triangles are fast. They have basic maths properties and years of hardware investment, which means they are unlikely to go away in the near future. That being said, the final GPU representation should not dictate the creative process.

In the days of Quake, going from 150ish triangles, to 300ish in Quake 2, up to 1500 triangles in Quake 3, it used to be true that triangles mattered. At the start of the PS4 era we were talking 100k for typical character models, so this is not the same conversation.

Texture space used to be 128x128 pixels but now we use multiple HD maps for a single character. Often the shader or material work makes more of an impact, with things like self shadowing and ambient occlusion removing a lot of the custom texture work of past generations.

Topology was likewise critical when elbows bent like a bad paper straw, but now complex rigs with secondary support for muscles and morph groups mean the game has changed.

There are better ways to solve both surfacing and animation of volumes. Dreams had solutions to these, and while I have since seen better solutions, Dreams made the bold choice to not have textures and not care about topology.

Rigging and Animation

Rigging and animation are another arcane art, both fragile and loaded with hidden knowledge. You need to know how UV scrolling and topology affect rigging, and you can still lose all of the work because the animation retargeting process, a modern lifesaver, didn’t work, leaving you to clean up after losing or corrupting months of animations.

This final stage of the process is often curtailed, time pressured and limited by earlier decisions. Also, animating a 3d object with 2d tools is even harder than making static 3d content, as an entire new dimension is added to the problem.

Dreams was approaching this from day one with a mind towards puppetry and accessible animation. That, combined with early mocap work I had seen coming out of the early Vive/Lighthouse system, showed huge promise. This was also after working with Computer Vision on earlier projects and knowing there were better ways to do it.

End of the Dream

One day there will be a book written, hopefully not by me but by those deeper into the history of the project. Though I have thoughts. Time has healed a lot of wounds and ultimately a great game was made and you can still play it today.

PSVR2 and PS5… I feel the most regret here. I tried and the fact it is forever locked to PSVR1 still hurts.

PC Version and Export… would have solved my Dream content problem.

Networking and Metaverse… We tried.

Why aren’t you still building them?

Dreams was wild. It was like a rock tour, though more on the scale of a massive world tour of a successful band doing their new album with all the baggage that entails. It was hard but it was a rollercoaster and while it hurt it suited my temperament. Almost everyone I speak to in the creative space has heard of Dreams so I know we made the splash where it matters. We showed the way.

I wrote about why I thought now was the time to leave Adobe in the first article of the series.

So is content still the biggest problem…

When I joined the Modeler team the ocean was blue and the space wide open. I feared that with the oncoming death of Dreams the magic would be lost before we could change the creative workflow. Over the last four years we have seen an explosion of tools. Things like Gravity Sketch, OpenBrush, Uniform, MagicaCSG, WOMP and Unbound.io made me sure the space would now evolve. Voxels, SDFs, NeRFs, Surfels, Splats and other modern reps are being discussed and shipped in big software stacks.

Finally, there is the big game changer of AI and generative 3D, which I will definitely write about soon.

I wanted to make 3d Content easier to make because I wanted richer digital worlds.

The solution is not here yet, but I’m confident that it will be soon and that I’m not the best person to work on it. Just like I knew I wasn’t the best person to work on the networking side of the problem.

I know this is a less technical, more long form article but I thought it was important to outline because it will inform some of the technical points I make in future articles. As I said, the why is a knife which lets you cut through to the correct decisions.